Welcome to this first article in the series about TDSD – Test-Driven Systems Design, which is a method for all kinds of product (system) development in the software domain, especially suitable for big software systems.

Apart from our organizational principles, TDSD is also built on the deductions made in System Collaboration. Understanding those deductions already leads to the conclusion that the test-driven systems design concept is a necessity in any product development domain. The deductions in System Collaboration also explain the prescriptive HOW steps that need to be followed and WHY they need to be followed, all in order to fulfil our organizational principles in this complex context (all principles activated), which in turn strongly decreases the solution space for our way of working. In TDSD we take the last step: we go into the domain, with the domain knowledge, skills and experience of the organization, and explore how the HOW can be further enhanced in order to complete the TDSD for software method. Of course, TDSD itself also fulfils all the organizational principles, so that the method has the highest possible quality assurance, with no built-in root causes from the start, which gives us the best possible chance to achieve quality assurance in our products. In this way, TDSD takes care of and solves the unfortunately far too many common problems of today that arise when big software systems are developed with current commercialized methods and frameworks. As observant readers, we can understand that even though TDSD for software focuses on software, it can with small adaptations also be used for hardware or for hybrids. This is because the organizational principles activated in a specific context are always domain independent, as concluded in System Collaboration. In fact, this directly means that the test-driven systems design approach is valid for all product development.

Introduction

That most commercialized methods are antisystemic was brought up by Dr. Russell Ackoff already in the 1990s, when he listed twenty of them from memory, without claiming that the list was complete. Some of them have been dissected in order to understand why they fail, which can be found in this series of blog posts with reference to Ackoff, and a general part on how and why it is important to dissect methods can be found in this blog post. Even though science about organizations has been available for decades, and was available already in the 1990s, the list of antisystemic methods on the market today is unfortunately considerably longer. This also applies to recent methods and frameworks for product development of big software systems, some of which have induced even more problems and also added new ones that we did not have earlier. It is always important to understand the past in order to build new methods on old, valid and proven experience. One example is why the waterfall way of working with projects succeeded in flying to the moon, but failed to make big software systems already in the 1980s. The failure depended not only on the limitations of the waterfall method not being understood, but also on the waterfall method not being followed properly, mostly by lacking the virtual delivery structure, and on the fact that making completely new systems means that there is transdisciplinary complexity to reduce. The difference between hardware and software product development, and between Technology Push and Consumer Pull, as well as the reason why there sometimes is failure, is deep-dived in this blog post series.

Here is a brief explanation of the coming chapters in this article. To be extra clear about the need for TDSD, the need is dissected in the first chapter, “Why TDSD? Do we not have too many software methods already?”. In the next chapter, “TDSD has the deductions made in System Collaboration as its foundation”, we will further go through why it is a necessity that TDSD for software and hybrids, as well as any other product development method, originates from these deductions. It is also important to understand that when working in a complex context, there are certain steps that need to be taken, which is also clearly shown in the deductions made by System Collaboration. The consequence is that any way of working in product development needs to be a method and can per se never be a framework, which is concluded here, and which of course also means that TDSD is a method.

The coming chapters in this series of articles explaining TDSD in detail are:

– Planning an initiative

– Systems design and systems test

– The portfolio team

– The Wholeness team

– The Team of teams

Warm welcome to TDSD!

Why TDSD? Do we not have too many software methods already?

The aim of TDSD is to focus on fulfilling the science of the organizational principles, since there are too many methods and frameworks that fulfil them to a too low degree, which generates an immense number of problems in the organization. And since, as concluded above, any way of working in product development needs to be a method, cherry-picking methods or sub-methods from a framework and trying to aggregate them into a total way of working for product development is also wrong per se. By focusing only on the fulfilment of the organizational principles, TDSD gives the people in the organization the right prerequisites for achieving a flourishing way of working. In this way, TDSD is also never more prescriptive than necessary, leaving slack to the organization depending on its domain, skills, competences, capabilities, experiences, people, tools, etc. The lack of appropriate methods or frameworks for development of big software systems over the complete software life cycle, a lack that generates a tremendous number of built-in problems for today’s organizations, is therefore simply the reason why TDSD has been developed. TDSD is derived from fundamental science, the organizational principles of people and activities, which in turn give the deductions in System Collaboration. In the same way, we need to follow Archimedes’ principle during the product development of our new boat if we want it to survive the launch, and plenty more science if it is going to survive the first storm. But TDSD is also suitably backed up by the available evidence of problems from methods and frameworks that are antisystemic. When a method is built on science, showing the anti-systemicness of other methods and frameworks, which is done in this article in SOSD about common root causes, is of course not needed. But it gives us an understanding of what is not an appropriate solution, a kind of anti-hypothesis, i.e., which solutions we should not bother with and not try again.
This hopefully gives you as a reader more confidence in your struggle to change your thought patterns. Finding the root causes of these built-in problems, which are due to the anti-systemicness of a method or framework, gives invaluable experience, and not only about how not to do the solution. By deducing, and by also including all this experience from the past, TDSD will have solutions from different old methods, and can therefore definitely be said to be a hybrid. In short, TDSD takes the very important top-down systems design and the need for long-term planning (top-down as well) of the product (and organization) from the waterfall way of working with projects, the iterative thinking with fast feedback loops through exploration of the product’s systems design from Lean Product Development, and finally the iterative thinking with fast feedback loops to understand what the customer really needs, in order to reduce uncertainty, from Agile.

TDSD has the deductions made in System Collaboration as its foundation

As mentioned in the series of articles about the deductions in System Collaboration, the line hierarchy is a solution that we will have in any bigger organization. This is because the line hierarchy reduces the complexity of having many people, giving a structure and overview that we humans can handle. This means that to make any way of working easier to understand, in any context and domain, we always use structures in order to reduce the complexity. Here it is also important to repeat that every structure that involves people needs to follow our organizational principles for humans of 5, 15, 150 etc., see this blog post. The numbers 5, 15 and 150 hint at a maximum size of just over 2000 persons in a rather flat virtual delivery hierarchy when doing really big delivery initiatives (not recommended). The hierarchy needs to be flat in order to avoid too many and too long chains of interactions, which make us lose information and trust. Probably it is also because going over 200 persons doing things together meant dividing into two parts, something that may also be part of our DNA, so that we can trust that our leader of 150 persons trusts the leaders of other groups of 150 persons. This is the biggest implementation that can be made, since the science is the (positive) constraint, see this blog post for more information and a picture. For line hierarchies, which are like resource pools, the size really has no limits. For repetitive work that truly is about aggregating parts, it is also possible for every manager to act as team leader and specialist on the process, which actually means that each of these virtual delivery structures (many different teams) delivers aggregated, predetermined parts repetitively. Here we will also have other limitations, for example infrastructural aspects in hardware manufacturing, which point more to other science than to our organizational principle for humans of a maximum team size of 15.
Since the line hierarchy is the primary foundation of any bigger organization, it will not be handled separately in more detail here in TDSD; all the details about why and how can be found in System Collaboration Deductions – The line hierarchy.
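The size hint of "just over 2000 persons" mentioned above can be sketched as simple arithmetic. The sketch below is only one possible reading and rests on an assumption that is not stated in the text: that a flat delivery hierarchy consists of at most 15 leaders (the maximum team size), each leading a group of at most 150 persons. The constant and function names are hypothetical.

```python
# Assumption (not stated in the article): the upper bound comes from
# one team of at most 15 leaders, each leading a group of at most 150 persons.
MAX_TEAM_SIZE = 15    # organizational principle for humans: maximum team size
MAX_GROUP_SIZE = 150  # organizational principle for humans: maximum group size


def max_flat_delivery_size(leaders=MAX_TEAM_SIZE, group_size=MAX_GROUP_SIZE):
    """Upper bound for a two-level (flat) virtual delivery hierarchy."""
    return leaders * group_size


print(max_flat_delivery_size())
```

Under this assumption the bound is 15 × 150 = 2250 persons, which is consistent with "just over 2000".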

In product development, we need to add the virtual delivery structure, which is responsible for the deliveries, a necessary setup in order to avoid classic sub-optimization. The main reason for the need of virtual delivery structures in product development is that a silo in the line hierarchy does not see the whole, which unintentionally leads it to have its own agenda and sub-optimizes the delivery from the whole. From the theory behind the deductions in System Collaboration, we can draw the conclusion that in order to properly implement even the line hierarchy with its silos, we first need to understand the overall solution for the way of working in the context we are operating in. In this case, this means the basic understanding that product development is about reducing disciplinary complexity (only for hardware), as well as transdisciplinary complexity/complicatedness for hardware, software and hybrids (systems design and systems test), before we can understand how to implement the virtual delivery structures, and a portfolio as well.

Without fully understanding the systemic organizational systems design solution of the line hierarchy, the virtual structures, the systems design/test and the portfolio, it is impossible to fully implement a flourishing method. But with common sense and the experience of past problems, mistakes and failures in antisystemic methods and frameworks, and with the help of the organizational principles and all the deductions that can be made, we know the prescription we need to follow. Adding the last part, the domain, as is done in TDSD, is where the experts of the organization can guide us, and also dissolve some minor problems that we definitely will find on our journey to a flourishing organization. This means that implementing TDSD in order to get cohesiveness between the parts of the organization is straightforward, and the prescriptive HOW from the deductions will help us a lot. TDSD is to some degree prescriptive, but only to the level needed for the organizational principles to be followed, which mostly will be the principles for us humans when adding the last part in order to achieve TDSD. This gives the organization and its people the best prerequisites and understanding for guiding themselves in the implementation/change/transformation, since they are the ones who best know their domain and the domain skills, knowledge and experience of their people.

When talking about knowledge for product development, we can divide it into four different categories, just to understand that there are different kinds of knowledge. The first category is the domain knowledge of the organization, i.e., the core business of the organization. The second category is other specific knowledge that we need from other domains, like law and cyber security, which can be needed in software development. This kind of knowledge is not the core business, so not everybody needs to know everything about it, and it is therefore of a more centralized kind, known only by a few persons. The third category is the disciplinary knowledge, which is domain independent but instead context dependent. For the complex context of product development, we will have disciplines like requirements, implementation or test engineering, which of course are spread over all levels of the organization doing the actual product development, but which also differ depending on the level. The last category is the transdisciplinary knowledge, which is dedicated to the complex context of product development, and which we will not see in a clear context like production. Transdisciplinary knowledge is about iteratively performing analysis and synthesis of the requirements. This means that not only participants who know about the whole system need to be present, but also participants from all the other disciplines mentioned above (the existing ones, though new ones, new combinations, etc. may be added), where the latter means people with overall knowledge about the respective subsystems within the architecture. This is about working with systems design, so that knowledge about the wholeness of the system is iteratively gained, until we have a solution with subsystems that gives a united, unified and well-functioning whole.
It is important to point out that by having subsystems with clear interfaces between them, Conway’s law can be fulfilled when we now continue with the different teams that are going to find the solution to our system. Maybe even more important to point out is that having parts with interfaces all over the system will never fulfil Conway’s law, which easily leads to failure, and the bigger the system, the higher the probability of failure, since the complexity has not been eliminated.
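The contrast between subsystems with clear interfaces and parts with interfaces all over the system can be illustrated with a rough count. This is only a sketch under stated assumptions, not a model taken from System Collaboration: the parts are assumed to be split evenly over the teams, every pair of parts (or subsystems) is assumed to potentially share an interface, and the function names and the numbers 20 and 4 are hypothetical.

```python
from math import comb


def cross_team_interfaces_parts(parts, teams):
    """Worst case when parts have interfaces all over the system:
    every pair of parts may interact, and only the pairs inside one
    team stay team-internal. Assumes parts are split evenly over teams."""
    if parts % teams != 0:
        raise ValueError("this sketch assumes an even split of parts over teams")
    per_team = parts // teams
    return comb(parts, 2) - teams * comb(per_team, 2)


def cross_team_interfaces_subsystems(teams):
    """Worst case when each team owns one subsystem with clear interfaces:
    at most one interface per pair of subsystems crosses a team boundary."""
    return comb(teams, 2)


# Illustrative numbers only: 20 parts divided over 4 teams.
print(cross_team_interfaces_parts(20, 4))   # 150 potential cross-team interfaces
print(cross_team_interfaces_subsystems(4))  # 6 potential cross-team interfaces
```

Under these assumptions, the number of interfaces that cross team boundaries grows roughly with the square of the number of parts when the interfaces are all over the system, while it only depends on the number of subsystems when the interfaces are clear.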

All our employees of course have domain knowledge, other specific knowledge, and disciplinary and transdisciplinary knowledge to different degrees, and the balance strongly depends on which level they are working on. This mix of knowledge will develop automatically and naturally during work as usual, i.e., in the same way as a new employee continuously learns the needed knowledge from the different categories. The top levels generally require overviewing domain knowledge and more of the structural parts of the disciplinary and transdisciplinary knowledge, while the bottom levels require more detailed sub-domain knowledge and less of the structural parts of the disciplinary and transdisciplinary knowledge.

At the different levels, we also need different kinds of specific knowledge from other domains, as mentioned above. This knowledge is normally difficult to acquire, or can be confidential, or both, like law or cyber security. But this specific knowledge, like cyber security, is often a very important part of the transdisciplinary work with the systems design. The reason is that this knowledge often is related to non-functional requirements, which require a top-down approach from the start. This in turn means that the knowledge is of a more centralized kind, and it is therefore naturally found at the top level.

As we have shown in the System Collaboration Deductions series, the transdisciplinary work with the systems design, level by level, is needed for reducing the complexity. At the same time, this will significantly reduce our cognitive load, since we are no longer trying to solve unsolvable symptoms originating from bottom-up aggregation. The resulting top-down approach, which creates the levels top-down one by one, also further reduces our cognitive load, so that in every needed job position, each with a different blend of the mentioned categories of knowledge, we can concentrate on what we are best at. And this cognitive load has nothing to do with complexity; it is just a limitation in our human capacity. This means that cognitive capacity limitations of this kind are context independent, and they are the reason why a single team never manufactures a whole car, since there are too many elements to learn and remember. Of course, we need T-shaping for our employees within every level, as well as between the levels, which we will deep-dive into later in this series.

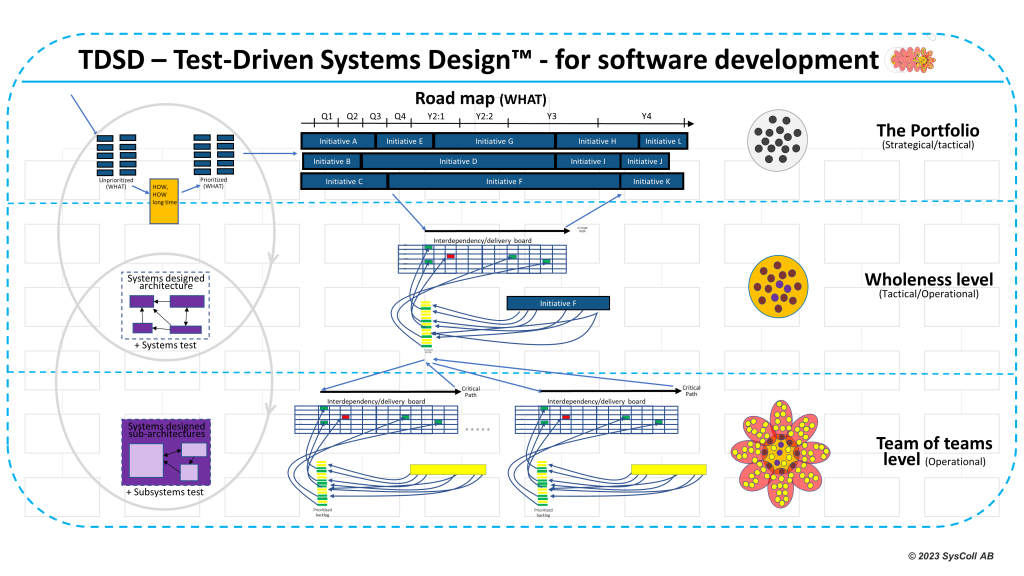

By going through the diagram in the summary article of System Collaboration Deductions, a diagram that starts at the top with the domain and purpose, we will come all the way to the appropriate method for the actual product development, i.e., go into the domain and, in our case, use TDSD. As we can understand, the experience and science already found by mankind, which in many cases have turned into (true) common sense, make it much easier for us to make a good implementation of TDSD, as well as to understand HOW and WHY it works. As already stated, this holds already from the start, with our first try, where the one-way track in the diagram gives us a sunny start on our first Walk in the Park. And, as also concluded earlier, the problems of antisystemic methods and frameworks give tremendously valuable information, so that we are sure about how we shall not do it (not even try), which for some of us means a complete change of thought pattern. The reason is that the failures of antisystemic methods and frameworks show anti-Best Practice, failing due to their inability to fulfil the organizational principles. One example is the problems when scaling agile, which are also exemplified in this article about common root causes in SOSD, as mentioned above. From this we can understand that our chosen method TDSD, with an organizational systems design as described in the article System Collaboration Deductions – Top-down synthesis, will fulfil our organizational principles, and is really the only way forward. With all the information about the prescriptions we need to follow from the deductions made by System Collaboration, together with the information above, we here have an overview of what TDSD looks like, where we are never more prescriptive than needed: TDSD overview.

The science and the System Collaboration Deductions give per se that desirable and mandatory non-functional requirements on our way of working, e.g., scalability, stability and flexibility, will be fulfilled, and that cybersecurity can be fulfilled with easy measures. We can understand that the combination of the line hierarchy and the virtual structures is suitable for covering different types of scalability, stability and flexibility. First, we have the line hierarchy with its stability, accountable for strategic and tactical work that is slower and has longer feedback loops, but that still can be scaled up or down in size without difficulties. Then we have the flexible virtual structures with short feedback loops, which have full flexibility and can easily be scaled to any size, which is a perfect match for doing the operational work, as well as for supporting parts of the tactical work.

The next article in the TDSD series is about the need to always plan an initiative.