This is the fourth part of a series of blog posts regarding the way of working history.

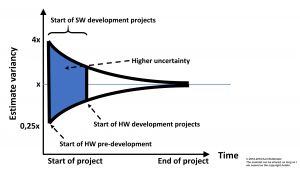

I believe many of you have already heard of the problems that large software projects experienced in the early days of software development. These problems may be smaller in this century, but they have still not been properly cured, and they have had no equivalent in hardware development, at least not during the last half century. In fact, it was sometimes said about software development at the end of the last century that you should multiply the original time estimate by pi and the cost estimate by pi squared! This led to an update of the Cone of Uncertainty by Barry Boehm in 1981 [1], where he stated an estimate variance of 0.25x to 4x for software development projects. Steve McConnell, who coined the expression Cone of Uncertainty, stated in 2006 [1] that the decrease of the estimate variance closer to the end of the project cannot be guaranteed. The updated Cone of Uncertainty with values for software product development looks like this:

Hardware projects doing Technology Push, where research, pre-studies and pre-development normally were not part of the project, started many decades earlier than software projects. Of course, they also faced problems, and still do to this day, especially now that the market demands ever more speed. But even though silos with KPIs give inherent sub-optimisation, and are therefore inappropriate for high speed even when working in projects, the problems for hardware projects were never of the same magnitude as for software projects. It is important to add that software development used the same waterfall way of working.

From my own time as a software engineer, the easy answer is that software is more complex to develop. There are more degrees of freedom, and software is therefore also more prone to errors. The architecture is ‘hidden’ and can become our own entanglement, and it is much easier to skip an integration event if we are late, something that will not be done in hardware, since it is too risky. In hardware we know that we need several prototypes with integration points, due to the complexity of meeting legal aspects like EMC, lightning, mechanical issues and IP, but also visible quality, for example. The architecture is directly visible as well, and customers don’t like an entanglement of cables, so a proper systems design of any hardware product is a must, which means reducing the transdisciplinary complexity/complicatedness. In software development, however, it is possible to think that maybe this time we will not get the big bang when we only do the very last integration event, i.e., no prototypes at all. Because this time is different; our specifications are better and our staff do not make any mistakes anymore. Right. On the other hand, software does not need to reduce the disciplinary complexity, which means there is no need to do research in the way hardware does. In hardware, research aims for parts of the total product that are smaller, less expensive and more efficient, with lower battery consumption, less heat dissipation, etc., since the market requires cheaper and better products. For many software products, the software only needs to change to some degree when the hardware changes, since if the software works, why should we change it? And here we clearly have a big difference between hardware and software: the hardware is generally more incremental, but the software is generally new.
When the hardware is completely new, it is more of a Technology Push, where the market is not waiting for the hardware, but with software it is Consumer Pull, which means high uncertainty for organisations developing software.

This means that Technology Push is all about efficiency (doing the things right): you need to do the things right in the project, since you already know what to do (pre-development and innovations are already done). And your systems design is only slightly changed, due to the incremental updates of vacuum cleaners, cars, or whatever the hardware is. This means that a couple of prototypes are enough to make the updated product work as a cohesive, unified and well-functioning whole. Consumer Pull was there almost from the beginning for software development, but not to the same degree for hardware development, even though it is becoming more and more common in today’s market and will certainly increase in the 2020s. Compared to Technology Push, Consumer Pull is instead much more about effectiveness (doing the right thing), since the customers are picky, but we shall not forget that customers do not want to wait either.

So, at the start-up of large software projects, there was not only sometimes new technology in the picture; there was also immaturity on both the software development side and the customer side. The customer side didn’t know what to ask for, and the software development side didn’t know that the customer side didn’t know what to ask for. We could also add the end user to make it even more complicated, but you get the point. And if we are not clear on our customers’ needs, how on earth can we then choose technology? And then, with all this uncertainty, a contract was signed. And contracts must be kept. In the Cone of Uncertainty, it will look like this when comparing hardware and software development projects:

This means that from the beginning of software development projects, we had not only uncertainty but also complexity in the picture, since the requirements were not yet set. Most of the time, this only meant increased uncertainty, but it could also mean that at the beginning of a software project we needed to spend a long period of time gaining knowledge in order to know what to do. And with the long phases of the waterfall way of working, a considerable amount of time then passed. This complexity increased the uncertainty, but if the complexity could not be solved fast enough, it also meant that more projects failed. That was indeed the case, even though it became better over the years, mostly for smaller projects [2]. Taking this complexity into account as well, an update of the Cone of Uncertainty would look like this:

We have a red area where it is not healthy to be, and if we make a comparison with the Cynefin™ framework [3], it means that we believe we are in the ordered domains, but in reality we are in the Complex domain. This goes hand in hand with the fact that the estimate variance of 0.25x to 4x for software development projects is only a best-case scenario, meaning that with complexity out of control, it can easily be worse. Below the x-axis the red colour is faded, because it is more likely that the complexity will add considerable time, even though a disruptive innovation could sometimes be a game changer.

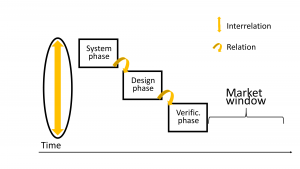

When we have high transdisciplinary complexity, especially in big systems in any domain, we need to take care of the systems design of the total system, and of course also the systems design of the parts. This means that we need enough cross-functional competence in the same boat, as well as the customer and end user, from the beginning. We need to work in a transdisciplinary way and keep iterations as short as possible in order to gain knowledge about our system and about how its parts work together. And here we have the really big difference between hardware and software, as mentioned above. Hardware is generally more incremental, making the waterfall way of working with projects a perfect match. But making a completely new system, in any domain, requires iterations of the systems design as well, and the more complex the system, the further in advance of the development of the parts these iterations need to start. Here the waterfall way of working with projects no longer fits the purpose, but Lean Product Development does. In Lean Product Development, making a new system, like a new platform, means taking a lot of parameters into consideration in the systems design of the platform, which makes iterations a necessity. More thorough specifications have been the attempted way forward when software projects were failing, but transdisciplinary complexity can never be reduced that way, and neither should we take that risk when we have transdisciplinary complicatedness (which requires prototypes). It is simply too complex for us humans to sort out; we need to iterate on the systems design until we have gained enough knowledge from the test results (the left oval in the picture below) to start the project or initiative, where we reduce the transdisciplinary complicatedness.

It is important to understand, though, that this transdisciplinary way of working in the oval continues in all the following phases; the picture above is only schematic.

As we have seen, there are many reasons why software development of big systems in particular has been failing, and unfortunately still is, and Consumer Pull, with its uncertainty about what the customer needs, is not the only reason. For software systems we need to consider making a new systems design much more frequently than for hardware, and the backend will be the main part of it. And this happens when the contract is already signed and the customer is waiting (Consumer Pull as well), compared to hardware, where completely new products did not have customers waiting (Technology Push). This means that many software systems are brand new systems, with considerable transdisciplinary complexity that needs to be reduced when we make the systems design. Therefore, this new systems design requires iterations that are not part of the traditional waterfall way of working; instead we need to look at the start of Lean Product Development, where the systems design is explored through experimentation. This also means that we need to learn to separate the uncertainty of what the customer needs (a UX component) from the complexity of making the systems design for the backend. This is important, since some domains, like banks, insurance companies, governments and many other service companies, mainly have the complexity of making the backend and not the uncertainty of what the customer needs. Add to this that these backend systems will be up and running for decades, and achieving the systems design fast becomes even more important. Achieving it as soon as possible calls for some kind of skeleton thinking, with the advantage that the systems test environment and the systems test cases for the system’s non-functional requirements also get an early start of implementation.
Given the need for a skeleton for big (software) systems, we also understand that the concept of emergent design/architecture is not possible, since it means having no hypothesis, only an aggregation of the parts. Emergent design/architecture is a concept used in small software systems, which often have a short life cycle, are not security- or safety-critical, etc., meaning that it is (sometimes) possible to refactor them and make the systems design in hindsight. As always, we need to be vigilant when we change the context for a method; see this blog post for more information.

Of course, we need to make an awesome UX for the customers, but with a UX component connected to the backend via well-defined APIs, where the backend can at first be simulated, this is manageable. And the UX part can most probably be developed in parallel, with only some delay while a skeleton of the systems design is brought up and running. But to get the skeleton up and running, the understanding of the UX is of course vital, which means that collaboration with the customer and end users will be an input to the systems design of the skeleton. In order to reduce transdisciplinary complexity/complicatedness, we always need to gather a transdisciplinary team with all the different disciplines, where UX is of course one of them.
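To make the idea of a UX component talking to a simulated backend through a well-defined API a bit more concrete, here is a minimal sketch in Python. It assumes a bank-style balance lookup, and all names (`AccountBackend`, `SimulatedAccountBackend`, `AccountView`, `get_balance`) are hypothetical, chosen only for illustration; it is not a prescribed design.

```python
from dataclasses import dataclass
from typing import Protocol


@dataclass(frozen=True)
class Balance:
    account_id: str
    amount_cents: int


class AccountBackend(Protocol):
    """Hypothetical API contract between the UX component and the backend."""

    def get_balance(self, account_id: str) -> Balance: ...


class SimulatedAccountBackend:
    """Hypothetical stand-in backend with canned data, used until the real skeleton is up."""

    def __init__(self, balances: dict[str, int]) -> None:
        self._balances = dict(balances)

    def get_balance(self, account_id: str) -> Balance:
        if account_id not in self._balances:
            raise KeyError(f"unknown account: {account_id}")
        return Balance(account_id, self._balances[account_id])


class AccountView:
    """UX-side component; it depends only on the AccountBackend contract."""

    def __init__(self, backend: AccountBackend) -> None:
        self._backend = backend

    def render_balance(self, account_id: str) -> str:
        balance = self._backend.get_balance(account_id)
        return f"{balance.account_id}: {balance.amount_cents / 100:.2f}"


if __name__ == "__main__":
    view = AccountView(SimulatedAccountBackend({"ACC-1": 123456}))
    print(view.render_balance("ACC-1"))  # prints "ACC-1: 1234.56"
```

Because `AccountView` only knows the contract, the simulated backend could later be swapped for the real skeleton implementation without touching the UX code, which is what lets the two parts proceed in parallel.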

Now we need to put everything together, but we must not forget how the market has changed up to today. It is all wrapped up in the next blog post, part five, the last one in this series about the way of working history.

C u then.

References:

[1] Boehm, Barry. The Funnel Curve. The Cone of Uncertainty.

https://en.wikipedia.org/wiki/Cone_of_Uncertainty

[2] The Standish Group. CHAOS Report 2015.

https://www.infoq.com/articles/standish-chaos-2015

[3] Snowden, Dave. The Cynefin™ framework. Link copied 2018-10-04:

https://www.youtube.com/watch?v=N7oz366X0-8